The Next Trillion-Dollar Wave - AI Narrative in Crypto: Who Are the Top Players?

Dec 12, 2024 21:40

Written by TechFlow

The AI field continues to heat up, with numerous projects striving to incorporate AI and claiming to "help AI perform better" in the hope of riding the AI wave to greater heights.

While many older projects have already been recognized for their value, and newer ones like Bittensor are no longer "new," we still need to identify projects whose potential has not yet been realized but which have compelling narratives.

Improving privacy within AI projects has always been an attractive direction:

It inherently resonates with the concept of equality in decentralization.

Protecting privacy inevitably involves technologies like zero-knowledge proofs (ZK) and homomorphic encryption.

A project that combines the right narrative with sophisticated technology is likely to thrive.

But what if a serious project also includes the playful element of meme coins?

In early March, a project named BasedAI quietly registered a Twitter account; apart from retweets, it has made only two substantive posts. Its website is extremely basic, featuring little more than a lofty academic whitepaper.

Some influencers have already begun analyzing it, suggesting it could be the next Bittensor.

Meanwhile, its token, $basedAI, has seen an astonishing 40-fold increase since late February.

Upon studying the project's whitepaper, we discovered that BasedAI is a project combining large language models, ZK, homomorphic encryption, and meme coins.

We appreciate its narrative direction and are particularly impressed by its clever economic design, which naturally links the allocation of computing resources with the use of meme coins.

Considering that the project is still in its very early stages, this article will explore its potential to become the next Bittensor.

What Exactly Does BasedAI Do?

Before answering this question, let's take a look at who is behind BasedAI.

BasedAI was developed by an organization called Based Labs in collaboration with the founding team of Pepecoin. Their goal is to address privacy issues in the use of large language models in the AI field.

Public information about Based Labs is scarce; its website is quite mysterious, showing only a string of tech buzzwords in a Matrix-like style. One of the organization's researchers, Sean Wellington, is the author of BasedAI's whitepaper.

Google Scholar also shows that Sean graduated from UC Berkeley and has published numerous papers on clearing systems and distributed data since 2006. He specializes in AI and distributed network research, giving him a substantial track record in the field.

On the other hand, Pepecoin is not the same as the currently popular PEPE coin. It originated as a meme in 2016 and initially had its own L1 mainnet but has since migrated to Ethereum.

You could say this is an OG meme that also understands L1 development.

But on one side, we have a serious AI science researcher, and on the other, a meme team. How do these seemingly unrelated groups come together to create sparks in BasedAI?

Putting the meme aspect aside, BasedAI's Twitter description highlights the project's narrative value:

"Your prompts are your prompts."

This emphasizes the importance of privacy and data sovereignty. When you use large language models like GPT, any prompts and information you enter are received by the server, essentially exposing your data privacy to OpenAI or other model providers.

While this may seem harmless, there are inherent privacy concerns, and you have to trust that the AI model provider won't misuse your conversation records.

Stripping away the complex math formulas and technical designs in BasedAI's whitepaper, the essence of BasedAI's mission can be understood as:

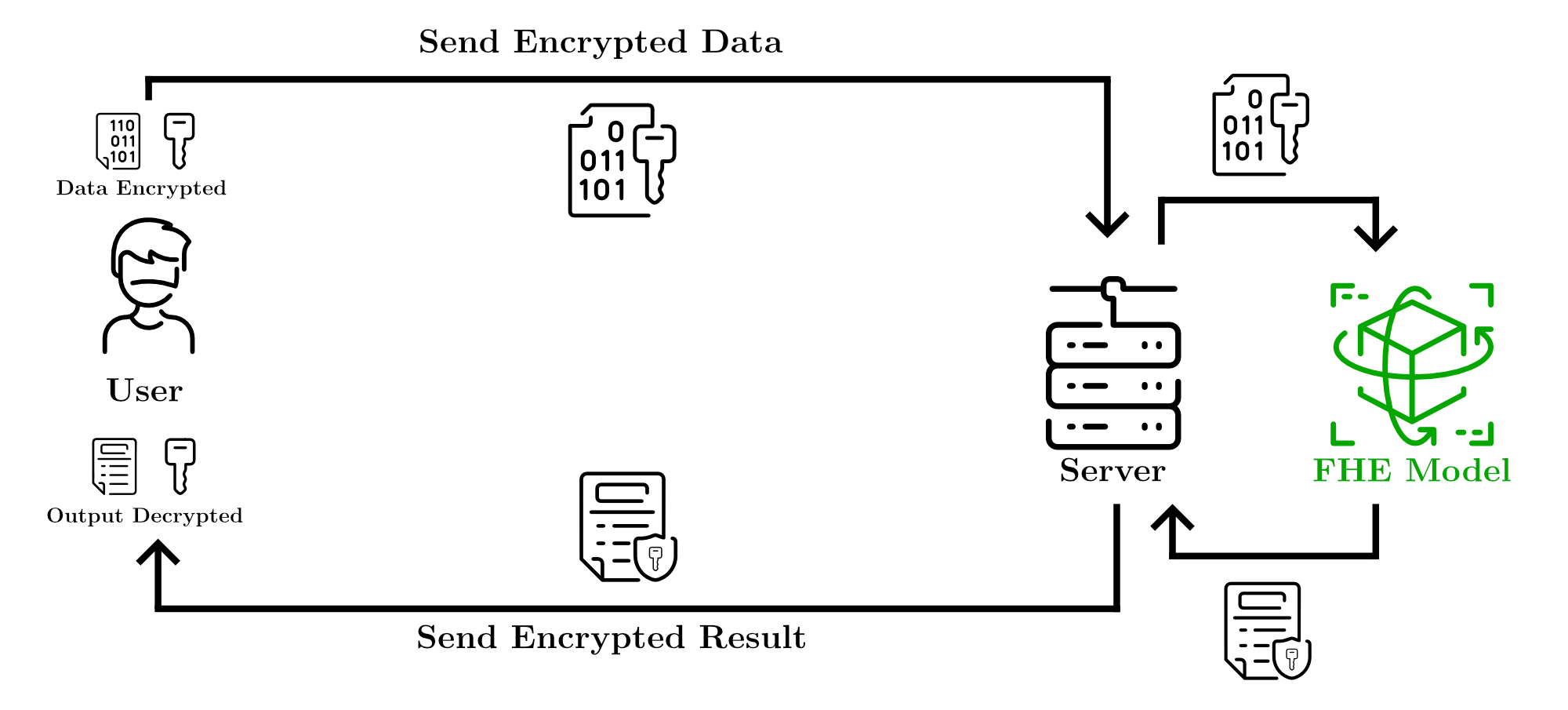

Encrypting all interactions you have with large language models, allowing the model to perform computations without exposing the plaintext, and ultimately returning results that only you can decrypt.

To achieve this, BasedAI leverages two privacy technologies: ZK (zero-knowledge proofs) and FHE (fully homomorphic encryption).

ZK allows you to prove the truth of a statement without revealing the actual data.
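To make the idea of a zero-knowledge proof concrete, here is a toy Schnorr identification protocol, a textbook example chosen by us rather than anything taken from BasedAI's whitepaper. The prover convinces a verifier that it knows a secret exponent `x` behind a public value `y = g^x mod p` without ever revealing `x`. The tiny group parameters are deliberately insecure and serve only as an illustration.

```python
import secrets

# Toy group parameters (INSECURE, illustration only):
# P = 2*Q + 1 is a safe prime, and G = 4 = 2^2 generates the subgroup of prime order Q.
P = 2039
Q = 1019
G = 4

def keygen():
    """Prover's secret x and public value y = G^x mod P."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)

def prove_commit():
    """Step 1: prover picks a random r and sends the commitment t = G^r mod P."""
    r = secrets.randbelow(Q)
    return r, pow(G, r, P)

def prove_respond(x, r, c):
    """Step 3: prover answers the challenge c with s = r + c*x mod Q."""
    return (r + c * x) % Q

def verify(y, t, c, s):
    """Verifier accepts iff G^s == t * y^c (mod P); x itself is never sent."""
    return pow(G, s, P) == (t * pow(y, c, P)) % P

x, y = keygen()
r, t = prove_commit()
c = secrets.randbelow(Q)      # Step 2: verifier's random challenge
s = prove_respond(x, r, c)
print(verify(y, t, c, s))     # True
```

The verifier only ever sees `y`, `t`, `c` and `s`; because `r` is fresh and random, `s` reveals nothing useful about `x` on its own.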

FHE enables computations to be performed on encrypted data.

Combining these two technologies, BasedAI allows your prompts to be encrypted when submitted to the AI model, which then returns an answer that only you can decrypt, with no intermediary knowing your questions or the responses.

While this sounds great, there's a critical issue: FHE consumes a lot of computational resources and time, leading to inefficiencies.

On the other hand, large language models like GPT require quick response times for user interactions. How can BasedAI balance computational efficiency and privacy protection?

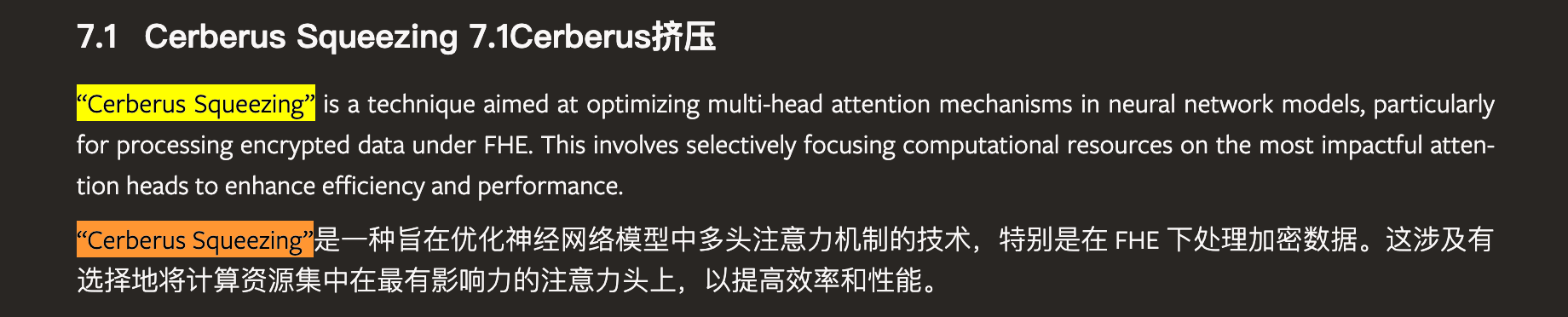

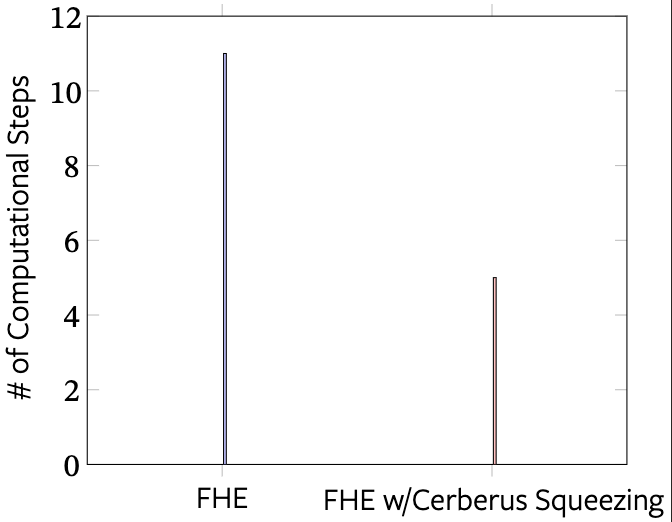

BasedAI's whitepaper addresses this by introducing a technique called "Cerberus Squeezing," backed by complex mathematical formulas, to optimize the efficiency of FHE.

We are not in a position to rigorously evaluate the mathematics behind this technique, but its purpose can be summarized simply as:

Optimizing the efficiency of processing encrypted data within FHE by selectively focusing computational resources on the most impactful areas to quickly complete calculations and display results.

The whitepaper also provides data demonstrating the efficiency improvements brought by this optimization:

With Cerberus Squeezing, the computational steps required for fully homomorphic encryption can be nearly halved.

Let's quickly simulate a typical user workflow with BasedAI:

A user wants to analyze the emotions expressed in someone's conversation record while keeping the record itself private.

The user submits this data in an encrypted form through the BasedAI platform and specifies the AI model to use (e.g., an emotion analysis model).

Miners in the BasedAI network receive the task and use their computational resources to run the specified AI model on the encrypted data.

The network nodes complete the computation without decrypting the data and return the encrypted results to the user.

The user decrypts the results with their private key to get the needed data analysis.
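The workflow above can be sketched end to end with a toy homomorphic scheme. As a stand-in we use textbook Paillier encryption, which is only *additively* homomorphic (it supports adding encrypted values and multiplying them by public constants, not arbitrary computation) and is not the FHE construction BasedAI's whitepaper describes. The "emotion model" is a hypothetical linear scorer with made-up features and weights, and the key size is far too small to be secure. The point is only to show a miner computing on data it cannot read.

```python
import math
import secrets

# --- User: toy Paillier key pair (Mersenne primes, far too small to be secure) ---
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q                       # public key, with generator g = N + 1
N2 = N * N
LAM = math.lcm(P - 1, Q - 1)    # private key
MU = pow(LAM, -1, N)            # private key; this simple form is valid because g = N + 1

def encrypt(m: int) -> int:
    """Enc(m) = (1 + N)^m * r^N mod N^2, with fresh randomness r coprime to N."""
    r = secrets.randbelow(N - 2) + 2
    while math.gcd(r, N) != 1:
        r = secrets.randbelow(N - 2) + 2
    return pow(1 + N, m, N2) * pow(r, N, N2) % N2

def decrypt(c: int) -> int:
    """m = L(c^LAM mod N^2) * MU mod N, where L(u) = (u - 1) // N."""
    return (pow(c, LAM, N2) - 1) // N * MU % N

# --- Miner: works on ciphertexts only, using the public N ---
def miner_linear_score(enc_features, weights):
    """Return Enc(sum(w * x)) without decrypting anything.
    Multiplying ciphertexts adds plaintexts; raising a ciphertext to w scales it by w."""
    acc = 1  # 1 is a valid (non-randomized) encryption of 0
    for c, w in zip(enc_features, weights):
        acc = acc * pow(c, w, N2) % N2
    return acc

# Hypothetical features extracted from a private chat log:
# [positive words, neutral words, negative words]
features = [7, 4, 2]
weights = [2, 1, 0]  # hypothetical public model weights (non-negative for simplicity)

ciphertexts = [encrypt(x) for x in features]          # user encrypts and submits
enc_score = miner_linear_score(ciphertexts, weights)  # miner never sees `features`
print(decrypt(enc_score))                             # 18, i.e. 7*2 + 4*1 + 2*0
```

Only the key holder can run `decrypt`; the miner handles nothing but ciphertexts and public weights. A real FHE scheme would additionally allow non-linear operations, which is where the heavy computational cost discussed above comes from.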

Beyond the technology, what specific roles exist within the BasedAI network to execute the technology and meet user needs?

First, we need to introduce the unique concept of "Brains."

In crypto AI projects, there are typically several key elements:

Miners: Responsible for executing computational tasks and consuming computing resources.

Validators: Verify the correctness of the miners' work and ensure the validity of transactions and computational tasks within the network.

Blockchain: Records the results of executed computations and validation tasks in the ledger, incentivizing various roles through native tokens.

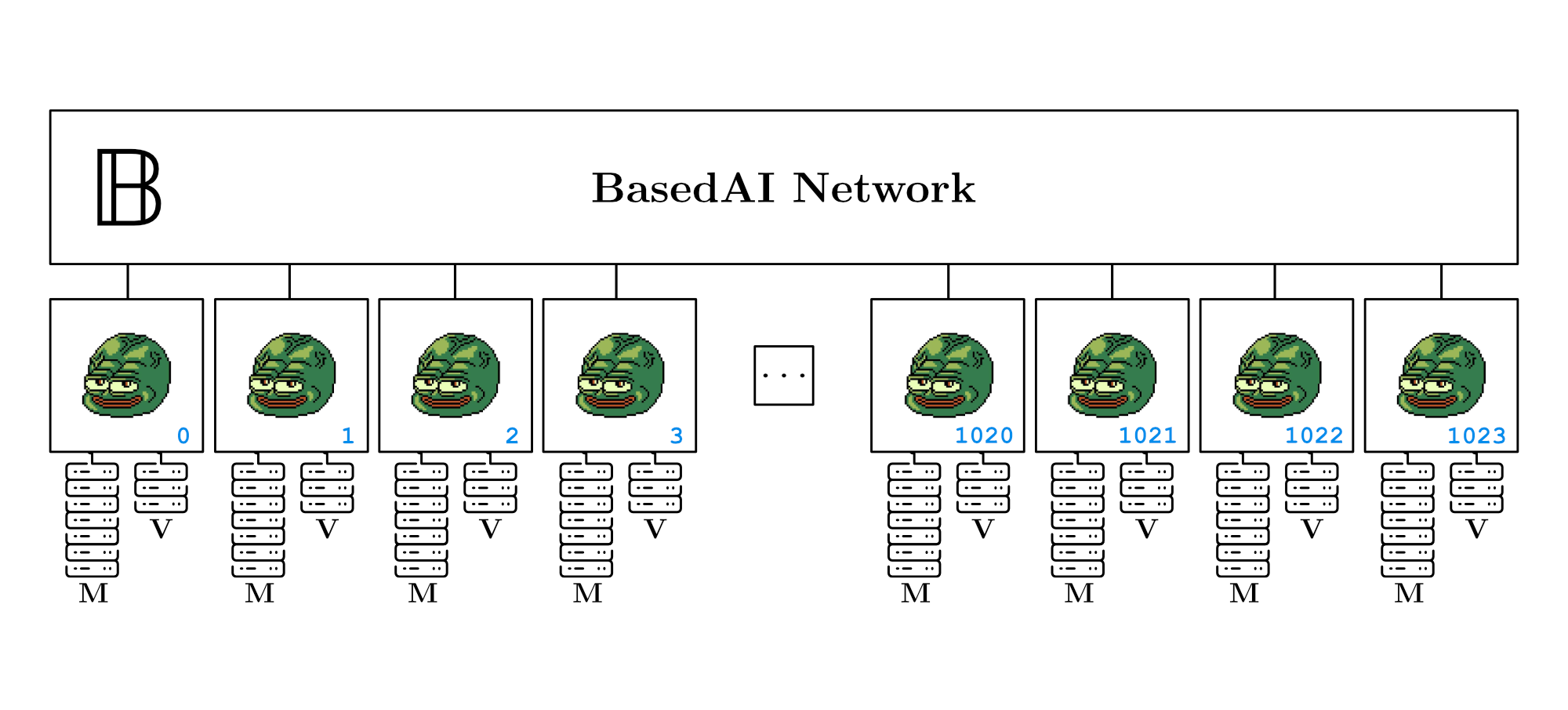

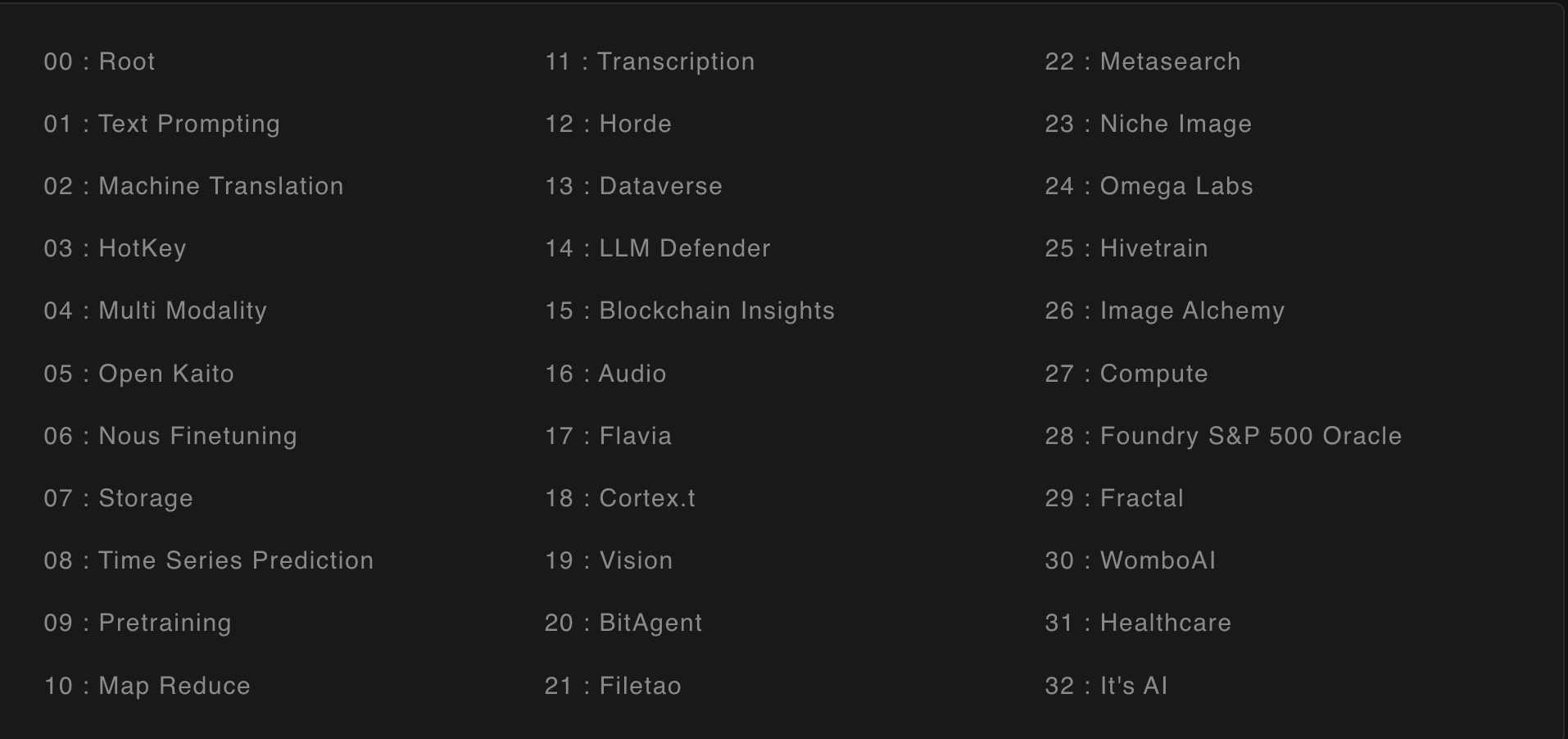

BasedAI builds upon these three elements by introducing the concept of "Brains":

"You need a Brain to incorporate the computing resources of miners and validators, enabling these resources to compute and complete tasks for different AI models."

Simply put, these "Brains" act as distributed containers for specific computational tasks, used to run modified large language models (LLMs). Each "Brain" can choose which miners and validators it wants to be associated with.

If this explanation seems abstract, you can think of owning a Brain as having a "cloud service license":

If you want to gather a group of miners and validators to perform encrypted computations for large language models, you need to hold an operational license. This license specifies:

Your operating address (ID)

Your scope of operations (e.g., emotion analysis, text-to-image generation, medical assistance using AI)

Your available computing resources and their capacity

The specific people you have recruited

The potential rewards for performing these tasks

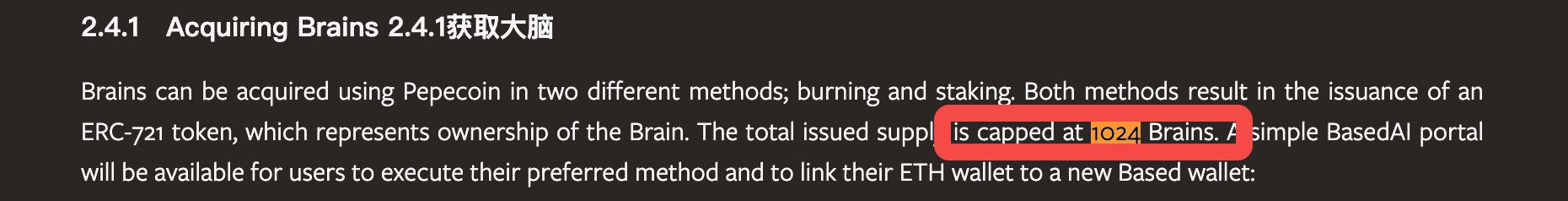

From BasedAI's whitepaper, we can see that each "Brain" can accommodate up to 256 validators and 1792 miners, with a total of only 1024 Brains in the system, adding to their scarcity.
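The "license" analogy and the per-Brain limits can be captured in a small data structure. This is a hypothetical Python model: the class, field and method names are ours, not BasedAI's, and only the capacity figures (256 validators and 1792 miners per Brain) come from the whitepaper numbers cited above.

```python
from dataclasses import dataclass, field

MAX_VALIDATORS_PER_BRAIN = 256
MAX_MINERS_PER_BRAIN = 1792

@dataclass
class Brain:
    """Hypothetical model of a Brain 'license' (names are illustrative)."""
    brain_id: int                                       # operating address / ID
    scope: str                                          # e.g. "emotion analysis"
    miners: set[str] = field(default_factory=set)       # recruited miners
    validators: set[str] = field(default_factory=set)   # recruited validators

    def admit_miner(self, addr: str) -> bool:
        """Admit a miner unless the Brain is already at miner capacity."""
        if len(self.miners) >= MAX_MINERS_PER_BRAIN:
            return False
        self.miners.add(addr)
        return True

    def admit_validator(self, addr: str) -> bool:
        """Admit a validator unless the Brain is already at validator capacity."""
        if len(self.validators) >= MAX_VALIDATORS_PER_BRAIN:
            return False
        self.validators.add(addr)
        return True

brain = Brain(brain_id=1, scope="emotion analysis")
admitted = sum(brain.admit_validator(f"val-{i}") for i in range(300))
print(admitted)  # 256: the remaining 44 applicants are turned away
```

Each Brain tracks its own roster, which is what lets it "choose which miners and validators it wants to be associated with" while the hard caps keep slots scarce.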

For miners and validators to join a Brain, they need to do the following:

Miners: Connect to the platform, decide the amount of GPU resources to allocate (which are more suitable for computation), and deposit $BASED tokens to start computational work.

Validators: Connect to the platform, decide the amount of CPU resources to allocate (which are more suitable for validation), and deposit $BASED tokens to begin validation work.

The more $BASED tokens miners and validators deposit, the more efficiently they can operate within a Brain, and the more $BASED rewards they can earn.
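The article does not spell out the reward formula. A common design, and the assumption behind this hypothetical sketch, is a pro-rata split: each participant in a Brain receives a share of that Brain's reward pool proportional to its $BASED deposit. The function name, addresses and amounts are illustrative only.

```python
from fractions import Fraction

def pro_rata_rewards(deposits: dict[str, int], pool: int) -> dict[str, Fraction]:
    """Split `pool` $BASED among participants in proportion to their deposits.
    ASSUMPTION: a pure pro-rata rule; BasedAI's actual formula may differ."""
    total = sum(deposits.values())
    if total == 0:
        return {addr: Fraction(0) for addr in deposits}
    # Fraction keeps the split exact instead of accumulating float rounding errors.
    return {addr: Fraction(pool * amount, total) for addr, amount in deposits.items()}

rewards = pro_rata_rewards({"miner-a": 3_000, "miner-b": 1_000, "validator-c": 1_000}, pool=500)
print({k: int(v) for k, v in rewards.items()})
# {'miner-a': 300, 'miner-b': 100, 'validator-c': 100}
```

Under this rule, tripling a deposit triples the reward share, which is the incentive the paragraph above describes.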

Clearly, a Brain represents a certain level of authority and organizational structure, which opens up opportunities for token and incentive design (to be detailed later).

Does this Brain design seem familiar?

Brains are somewhat similar to Bittensor's subnets, each performing specific tasks using different AI models.

They are also reminiscent of Polkadot's parachain slots from the previous cycle, where each slot ran a parallel chain executing different tasks.

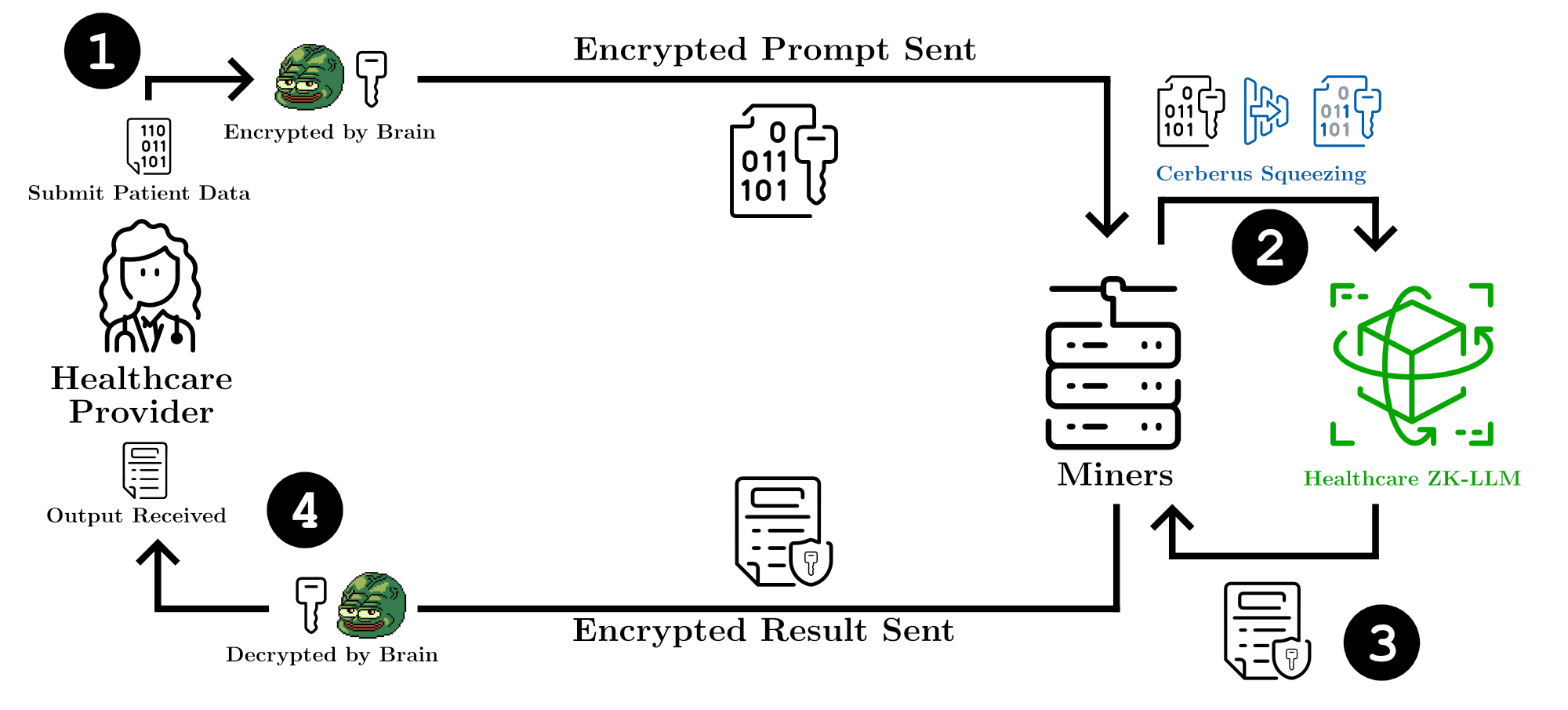

BasedAI has also provided an illustration of a "Medical Brain" performing tasks:

Patient medical records are encrypted and submitted to the Medical Brain, generating prompts to ask for appropriate diagnostic suggestions.

The relevant large language model in the BasedAI network, with the help of ZK and FHE, processes the encrypted data without decrypting the sensitive patient information. This step utilizes the computational resources of miners and validators.

The healthcare provider that submitted the records receives the encrypted output from the BasedAI network. Only this submitting user can decrypt the results to obtain the treatment suggestions, so the data remains protected throughout the process and is never exposed or leaked.

How do you obtain a Brain, or the license to start encrypted AI model computations?

BasedAI has creatively partnered with Pepecoin to sell these permissions, giving the meme token Pepecoin additional utility.

There are only 1024 Brains available, so the project naturally uses NFT minting. Each Brain sold generates a corresponding ERC-721 token, which can be seen as a license.

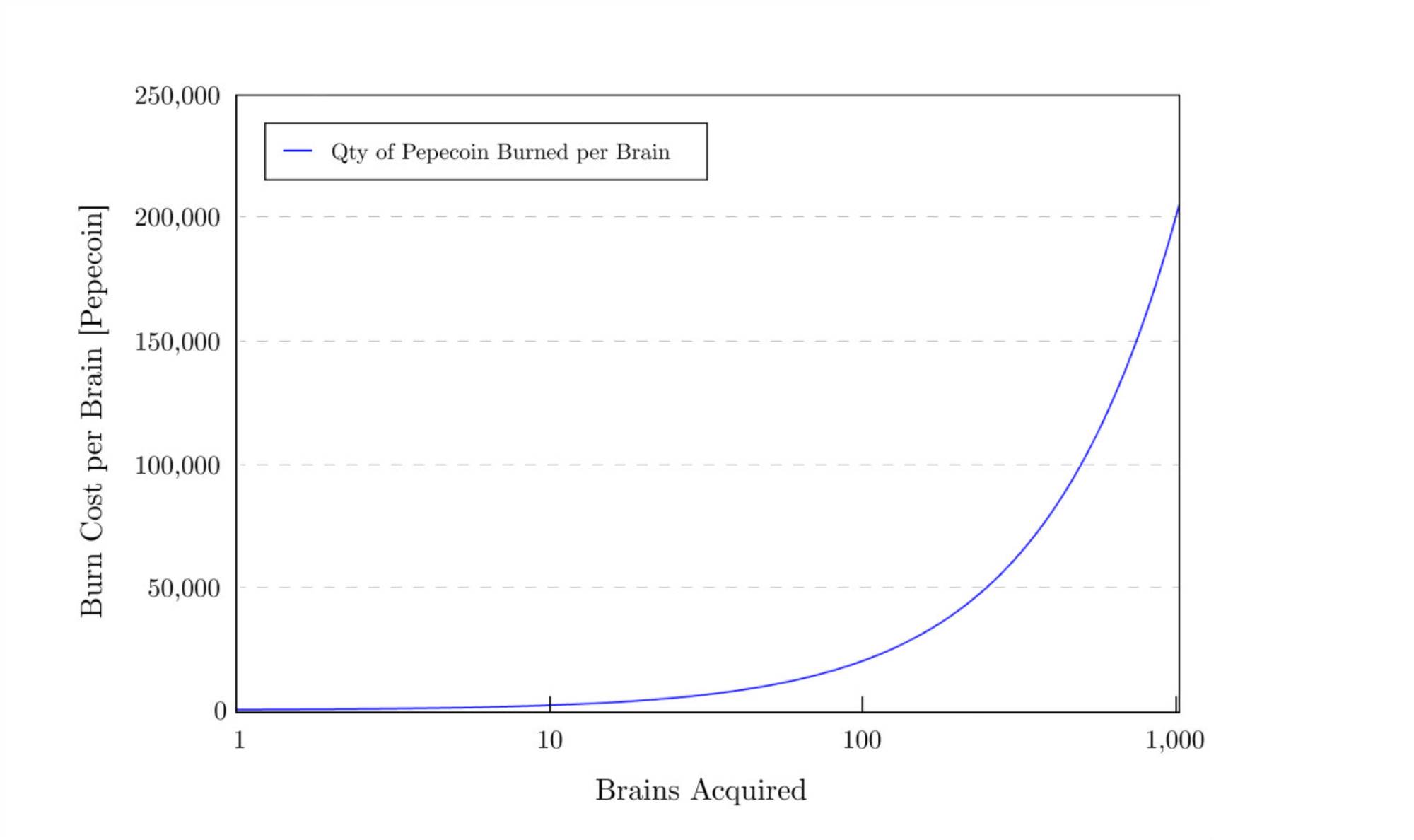

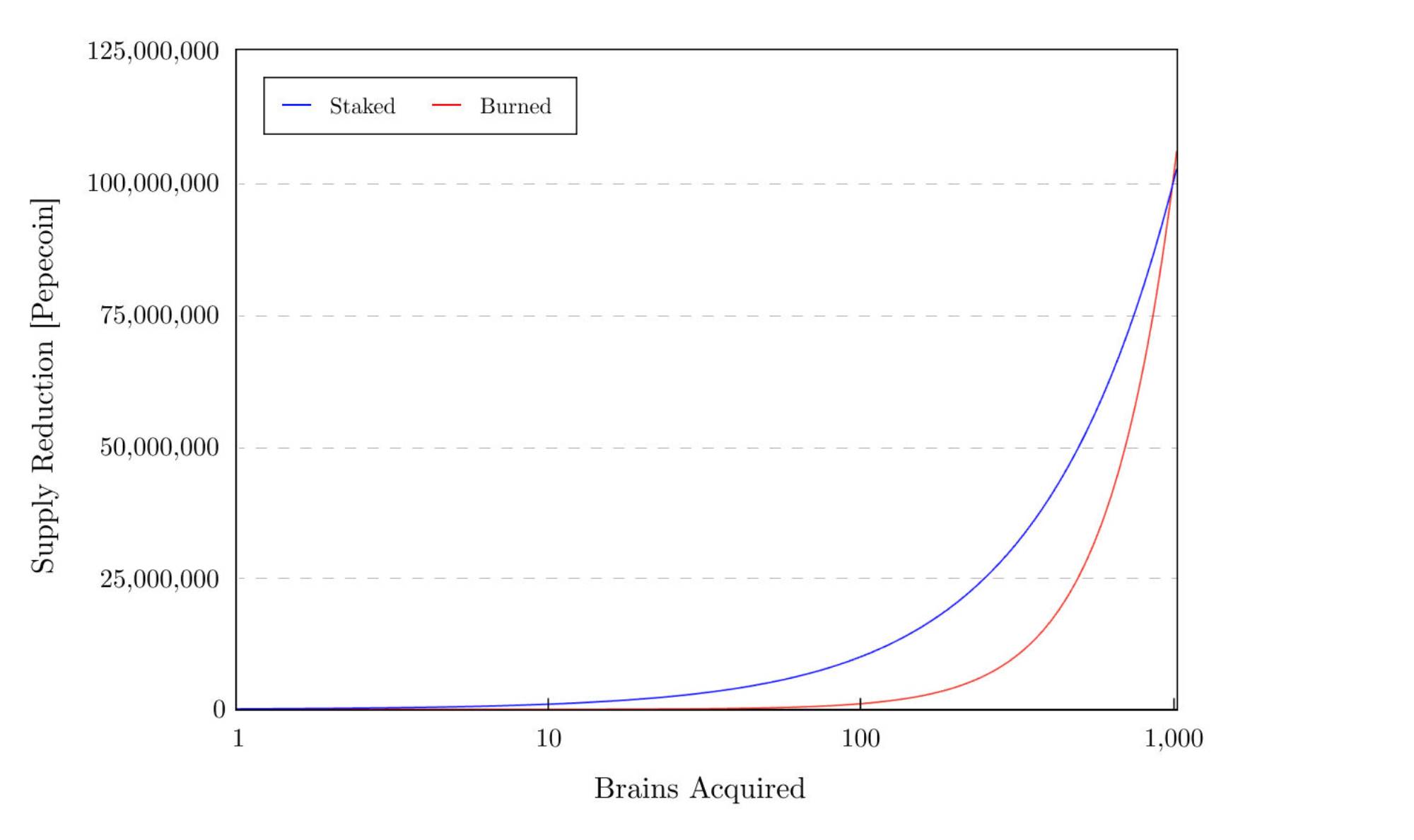

To mint this Brain NFT, you need to perform one of two Pepecoin-related actions: burn or stake Pepecoin.

For burning, the first Brain requires users to spend 1000 Pepecoin to mint.

Each subsequent minting increases the cost by 200 Pepecoin.

Brains created this way are transferable.

If all Brains are acquired through burning, a total of 107,563,530 Pepecoin will be permanently destroyed. (According to CoinMarketCap data, the current circulating supply is 133M, so if this burn were fully realized, it would reduce the token supply by roughly 80%.)
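The burn schedule described above is an arithmetic series, so the total can be checked directly. Assuming exactly 1024 Brains, a 1000-Pepecoin first mint and a flat +200 step for every subsequent mint, the sketch below arrives at 105,779,200 Pepecoin, somewhat below the 107,563,530 figure quoted; the gap presumably reflects schedule details not covered in this summary. Either way, against a 133M circulating supply the reduction is roughly 80%.

```python
FIRST_COST = 1_000          # Pepecoin burned for the first Brain
STEP = 200                  # cost increase for each subsequent mint
N_BRAINS = 1_024            # total Brains
CIRCULATING = 133_000_000   # circulating supply cited above (CMC)

def burn_cost(k: int) -> int:
    """Pepecoin burned to mint the k-th Brain (1-indexed), under the assumed linear schedule."""
    return FIRST_COST + STEP * (k - 1)

# Closed form of the arithmetic series: n*a + d*n*(n-1)/2
total = N_BRAINS * FIRST_COST + STEP * N_BRAINS * (N_BRAINS - 1) // 2
assert total == sum(burn_cost(k) for k in range(1, N_BRAINS + 1))

print(burn_cost(N_BRAINS))           # 205600: cost of the last Brain
print(total)                         # 105779200
print(f"{total / CIRCULATING:.1%}")  # 79.5%
```

Because the price rises linearly, late mints are far more expensive than early ones: the 1024th Brain costs over 200 times as much as the first.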

For staking:

Users are required to stake 100,000 Pepecoin for a period of 90 days.

The Brain ERC-721 NFT is issued immediately after staking.

Brains generated this way are non-transferable but gradually earn $BASED, the project's native token, as a reward.

After 90 days, the stake can be withdrawn.

Whether through burning or staking, as more Brains are created, the corresponding amount of Pepecoin will either be burned or locked, depending on the participation ratio of the two methods.

Clearly, this is less about allocating AI resources and more about distributing crypto assets.

Due to the scarcity of Brains and the token rewards they generate, the demand for Pepecoin will significantly increase when creating a Brain. Both staking and burning will reduce the circulating supply of Pepecoin, which theoretically benefits its secondary market price.

As long as the number of issued and active Brains in the ERC-721 contract is below 1024, the BasedAI Portal will continue to issue Brains. Once all 1024 Brains are distributed, no new Brains can be created.
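The issuance rules described above (a hard cap of 1024 Brains, transferable Brains via burning, non-transferable Brains via a 90-day stake of 100,000 Pepecoin) can be modeled as a small state machine. This is a hypothetical off-chain Python sketch, not the actual ERC-721 contract or BasedAI Portal. Class and method names are ours, every issued Brain counts toward the cap, and we assume the +200 burn-price step advances only with Brains minted by burning.

```python
from dataclasses import dataclass
from typing import Optional

MAX_BRAINS = 1024
STAKE_AMOUNT = 100_000         # Pepecoin locked per staked Brain
LOCK_SECONDS = 90 * 24 * 3600  # 90-day lock

@dataclass
class BrainNFT:
    token_id: int
    owner: str
    transferable: bool                    # burned: True, staked: False
    unlock_time: Optional[float] = None   # when a staked deposit may be withdrawn

class BrainPortal:
    """Hypothetical model of the mint rules; not BasedAI's actual contract."""

    def __init__(self) -> None:
        self.brains: list[BrainNFT] = []
        self.burn_mints = 0  # ASSUMPTION: the price step counts burn mints only

    def _next_id(self) -> int:
        """Enforce the 1024-Brain cap before issuing a new token ID."""
        if len(self.brains) >= MAX_BRAINS:
            raise RuntimeError("all 1024 Brains have been issued")
        return len(self.brains) + 1

    def mint_by_burn(self, owner: str) -> tuple[BrainNFT, int]:
        """Burn Pepecoin for a transferable Brain; returns (NFT, amount burned)."""
        token_id = self._next_id()
        cost = 1_000 + 200 * self.burn_mints
        self.burn_mints += 1
        nft = BrainNFT(token_id, owner, transferable=True)
        self.brains.append(nft)
        return nft, cost

    def mint_by_stake(self, owner: str, now: float) -> tuple[BrainNFT, int]:
        """Lock Pepecoin; the NFT is issued immediately but cannot be transferred."""
        nft = BrainNFT(self._next_id(), owner, transferable=False,
                       unlock_time=now + LOCK_SECONDS)
        self.brains.append(nft)
        return nft, STAKE_AMOUNT

    @staticmethod
    def can_withdraw_stake(nft: BrainNFT, now: float) -> bool:
        """A staked deposit becomes withdrawable once the 90-day lock has elapsed."""
        return nft.unlock_time is not None and now >= nft.unlock_time

portal = BrainPortal()
b1, burned1 = portal.mint_by_burn("alice")
b2, burned2 = portal.mint_by_burn("bob")
b3, locked = portal.mint_by_stake("carol", now=0)
print(burned1, burned2, locked)                                   # 1000 1200 100000
print(b3.transferable, portal.can_withdraw_stake(b3, 89 * 86400))  # False False
```

Once 1024 Brains exist, both mint paths raise, mirroring the rule that no new Brains can be created after full distribution.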

An Ethereum address can hold multiple Brain NFTs, and the BasedAI Portal will let users manage the rewards earned by all Brains associated with their connected ETH wallet. According to the official whitepaper, active Brain owners are projected to earn between $30,000 and $80,000 per Brain annually.

Given these economic incentives, combined with the narrative of AI and privacy, it's easy to foresee the high demand for Brains once they officially launch.

In crypto projects, the technology itself isn't the ultimate goal. It's meant to capture attention, driving asset allocation and flow.

BasedAI's Brain design shows they've mastered asset distribution. By emphasizing data privacy, they've turned AI computation resources into a form of permission, created scarcity for this permission, and directed assets into it, boosting demand for another meme token.

Computing resources are well allocated and incentivized, the project's "Brain" assets gain both scarcity and attention, and the meme coin's circulating supply is reduced.

From an asset creation perspective, BasedAI's design is sophisticated and clever.

However, some uncomfortable questions are often left unasked:

How many people will actually use this privacy-protecting large language model? How many major AI companies will be willing to adopt such privacy-focused technology that may not serve their interests?

The answers might not be very optimistic.

Nevertheless, with the narrative gaining momentum, it's a ripe time for speculation. Sometimes, the key is not to question whether there is a viable path but to go with the flow.
